GeminiMcpServer

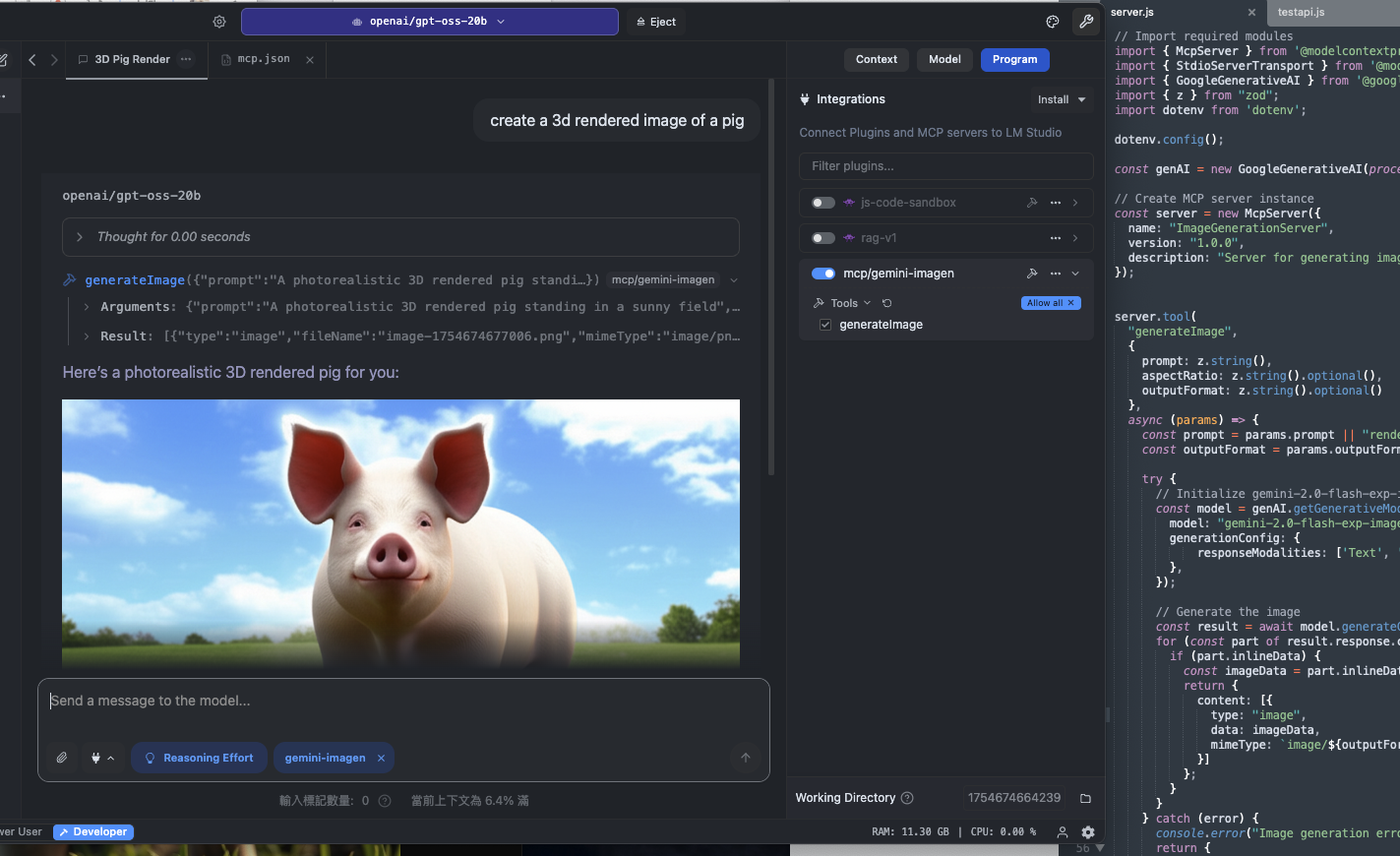

GeminiMcpServer is an MCP server that connects LM Studio with the Google Gemini API, supporting image generation and multimodal task processing.

Rating: 2 points

Downloads: 11.0K

What is GeminiMcpServer?

GeminiMcpServer is a bridge service that lets you use Google Gemini's image generation and multimodal processing capabilities directly in AI tools such as LM Studio.

How to use GeminiMcpServer?

Install and configure it, and you can call Google Gemini functions from the AI tools you already use.

Applicable scenarios

Scenarios that require image generation or combined text-image processing, such as creative design, content creation, and multimodal AI application development.

Main features

Full MCP support

Seamlessly integrate with MCP clients such as LM Studio

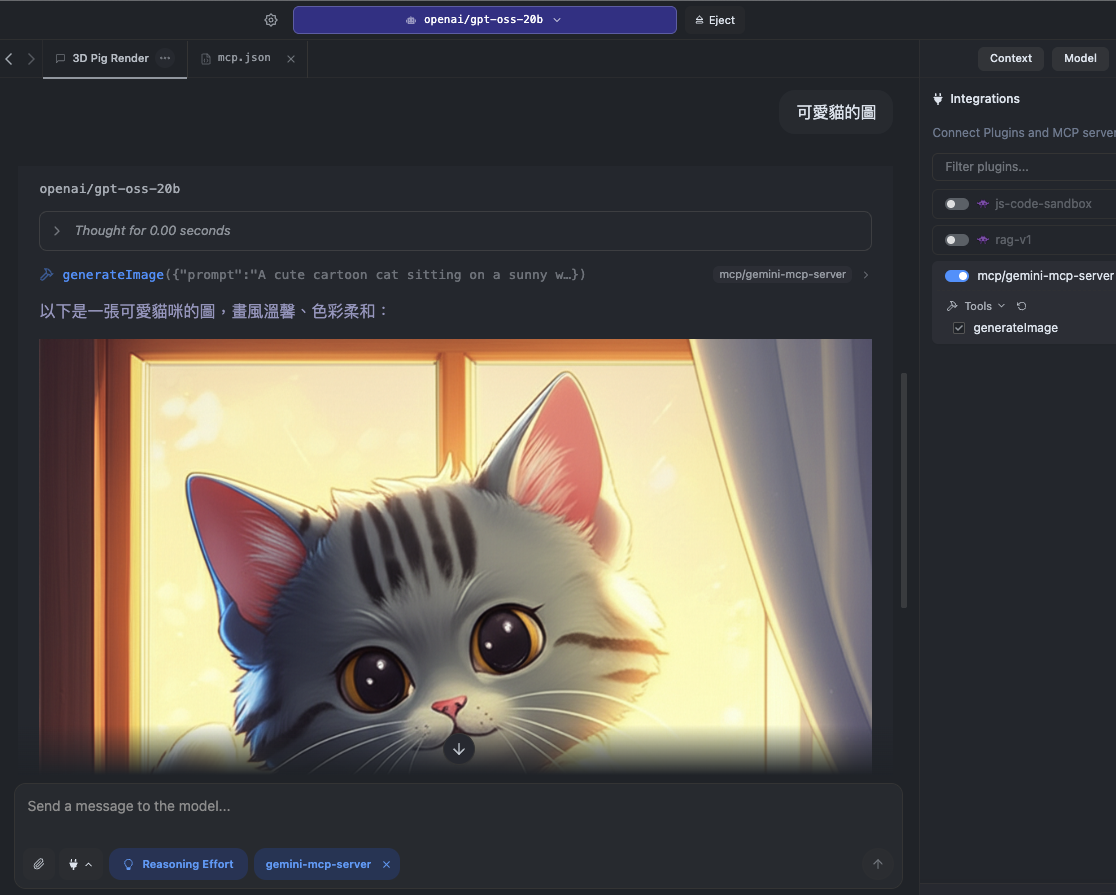

Image generation

Generate high-quality pictures using the Gemini 2 model

Multimodal input

Simultaneously process text and image input (optional feature)

Local + cloud hybrid

Run LM Studio locally and use the Gemini API in the cloud
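
In practice, the local + cloud split usually means the MCP client launches the server as a local process and hands it the cloud API key through the environment. The sketch below builds such a client entry in the common `mcpServers` JSON layout used by MCP clients. The entry script path, the server label, and the `GEMINI_API_KEY` variable name are assumptions for illustration, not values confirmed by the project.

```typescript
// Shape of one server entry in the widely used "mcpServers" client config layout.
interface McpServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

interface McpConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Build a config that starts the server with Node.js and passes the API key.
// "GEMINI_API_KEY" is a hypothetical variable name; check the project's README.
function buildConfig(serverName: string, entryScript: string, apiKey: string): McpConfig {
  return {
    mcpServers: {
      [serverName]: {
        command: "node",
        args: [entryScript],
        env: { GEMINI_API_KEY: apiKey },
      },
    },
  };
}

// Hypothetical path and placeholder key, for illustration only.
const config = buildConfig("gemini", "/path/to/GeminiMcpServer/index.js", "YOUR_API_KEY");
console.log(JSON.stringify(config, null, 2));
```

The printed JSON is what would be pasted into the client's MCP configuration file, after replacing the path and key with real values.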

Advantages

Use Gemini functions in LM Studio without development

Obtain powerful cloud capabilities while retaining local AI processing

Simple installation and configuration process

Limitations

Requires a Google API Key (may require payment)

Depends on a network connection to access the Gemini service

The image generation function is currently experimental

How to use

Installation preparation

Ensure that Node.js v20 is installed

Get the API key

Obtain the Gemini API Key from Google AI Studio

Install the service

Clone the repository and install dependencies

Configure the environment

Set the API key in the .env file

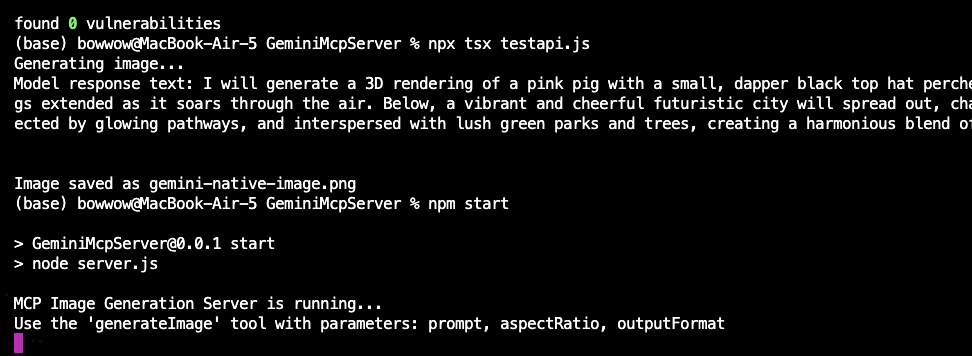

Start the service

Run the server
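
Two of the steps above fail quietly when skipped: the Node.js version and the API key in `.env`. The following is a minimal sketch of a pre-start check, not the project's own code. It assumes the key is stored under a variable named `GEMINI_API_KEY` (hypothetical) and treats v20 as a minimum version.

```typescript
// Assumption: v20 is treated as the minimum supported major version.
const REQUIRED_NODE_MAJOR = 20;
// Hypothetical variable name; the project's README defines the real one.
const API_KEY_VAR = "GEMINI_API_KEY";

/** Parse KEY=VALUE lines, skipping blank lines and # comments. */
function parseEnv(text: string): Record<string, string> {
  const result: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // not a KEY=VALUE line
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    // Strip one pair of matching surrounding quotes, if present.
    const first = value.charAt(0);
    if (value.length >= 2 && (first === '"' || first === "'") && value.endsWith(first)) {
      value = value.slice(1, -1);
    }
    result[key] = value;
  }
  return result;
}

/** Return a list of setup problems; an empty list means the checks passed. */
function checkSetup(nodeVersion: string, env: Record<string, string>): string[] {
  const problems: string[] = [];
  const majorText = nodeVersion.replace(/^v/, "").split(".")[0] ?? "";
  const major = Number.parseInt(majorText, 10);
  if (Number.isNaN(major) || major < REQUIRED_NODE_MAJOR) {
    problems.push(`Node.js v${REQUIRED_NODE_MAJOR} or later expected, found ${nodeVersion}`);
  }
  if (!env[API_KEY_VAR]) {
    problems.push(`${API_KEY_VAR} is missing or empty in .env`);
  }
  return problems;
}
```

In a Node.js script, `checkSetup` would typically receive `process.version` and the parsed contents of the `.env` file, and the server would only be started when the returned list is empty.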

Usage examples

Image generation

Generate a picture in a specified style through LM Studio

Multimodal analysis

Analyze text and image content simultaneously
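
When LM Studio invokes a tool, the MCP client sends the server a JSON-RPC 2.0 `tools/call` request. The sketch below shows the shape of such a request for an image generation call. The tool name `generate_image` and the `prompt` argument are hypothetical; the names this server actually exposes can be listed with a `tools/list` request.

```typescript
// JSON-RPC 2.0 request shape for invoking an MCP tool.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: {
    name: string;
    arguments: Record<string, unknown>;
  };
}

// Build a tools/call request for the given tool and arguments.
function buildToolCall(
  id: number,
  name: string,
  args: Record<string, unknown>,
): ToolCallRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Hypothetical tool and argument names, for illustration only.
const request = buildToolCall(1, "generate_image", {
  prompt: "A watercolor painting of a lighthouse at dusk",
});

// Over the stdio transport, each message is sent as one line of JSON.
console.log(JSON.stringify(request));
```

The client normally builds and sends this message itself; seeing its shape is mainly useful when debugging the server outside LM Studio.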

Frequently Asked Questions

Is it necessary to pay for use?

Which clients are supported?

Which formats are supported for image generation?

How to confirm that the service is running normally?

Related resources

Google AI Studio

Apply for an API key

LM Studio official website

Download the MCP client

GitHub repository

Project source code

Notion Api MCP

Certified

A Python-based MCP Server that provides advanced to-do list management and content organization functions through the Notion API, enabling seamless integration between AI models and Notion.

Python

17.8K

4.5 points

Markdownify MCP

Markdownify is a multi-functional file conversion service that supports converting multiple formats such as PDFs, images, audio, and web page content into Markdown format.

TypeScript

30.9K

5 points

Gitlab MCP Server

Certified

The GitLab MCP server is a project based on the Model Context Protocol that provides a comprehensive toolset for interacting with GitLab accounts, including code review, merge request management, CI/CD configuration, and other functions.

TypeScript

21.6K

4.3 points

Duckduckgo MCP Server

Certified

The DuckDuckGo Search MCP Server provides web search and content scraping services for LLMs such as Claude.

Python

61.5K

4.3 points

Figma Context MCP

Framelink Figma MCP Server is a server that provides access to Figma design data for AI programming tools (such as Cursor). By simplifying the Figma API response, it helps AI more accurately achieve one-click conversion from design to code.

TypeScript

57.1K

4.5 points

Unity

Certified

UnityMCP is a Unity editor plugin that implements the Model Context Protocol (MCP), providing seamless integration between Unity and AI assistants, including real-time state monitoring, remote command execution, and logging.

C#

27.8K

5 points

Gmail MCP Server

A Gmail MCP server with automatic authentication, designed for Claude Desktop. It supports Gmail management through natural-language interaction, including sending emails, label management, and batch operations.

TypeScript

18.8K

4.5 points

Minimax MCP Server

MiniMax MCP Server is the official MiniMax Model Context Protocol (MCP) server. It supports interaction with text-to-speech and video/image generation APIs and works with client tools such as Claude Desktop and Cursor.

Python

42.0K

4.8 points